Evaluate multiple modeling approaches for #TidyTuesday spam email

Use workflowsets to evaluate multiple possible models to predict whether email is spam.

Machine learning, text analysis, and more

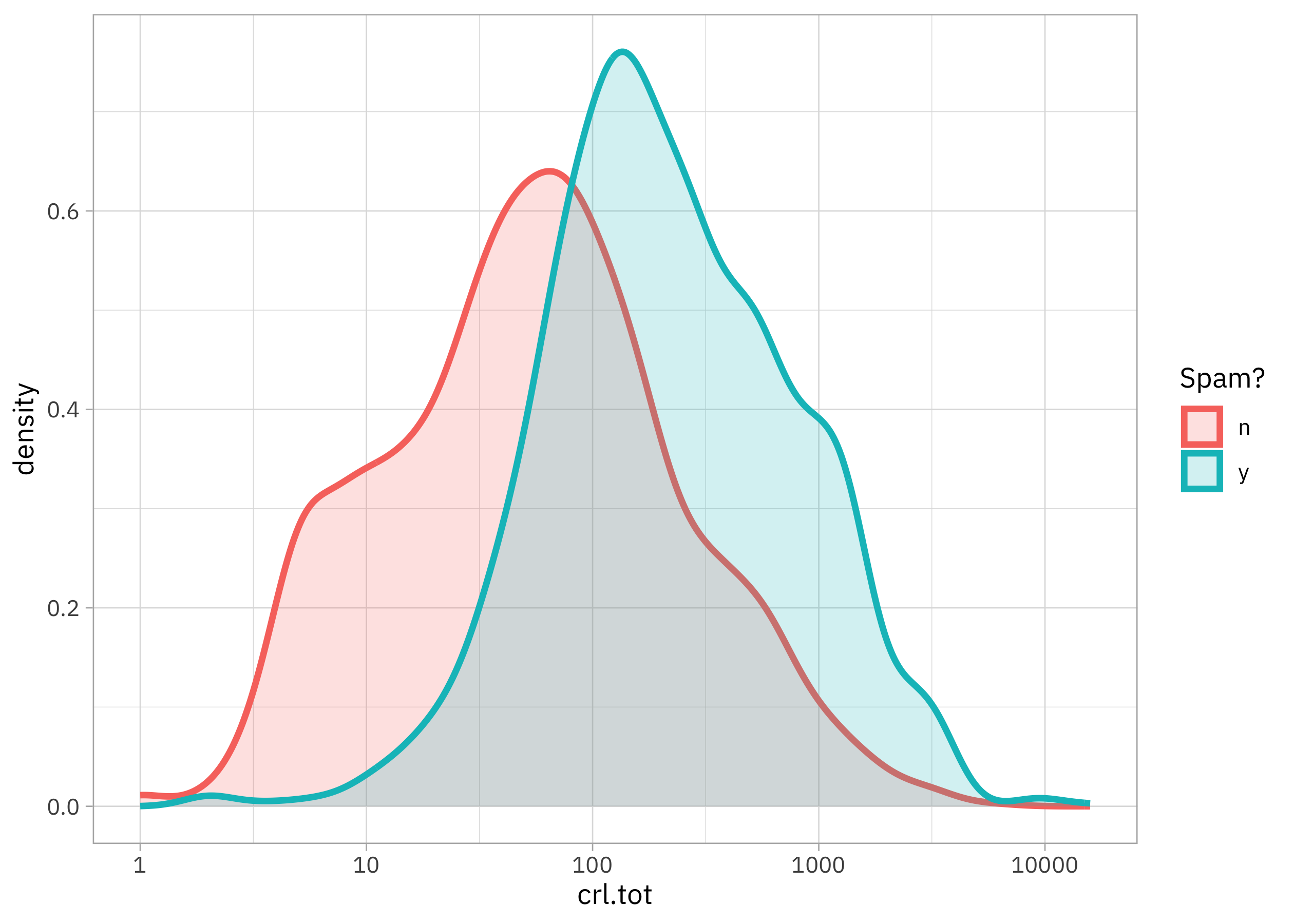

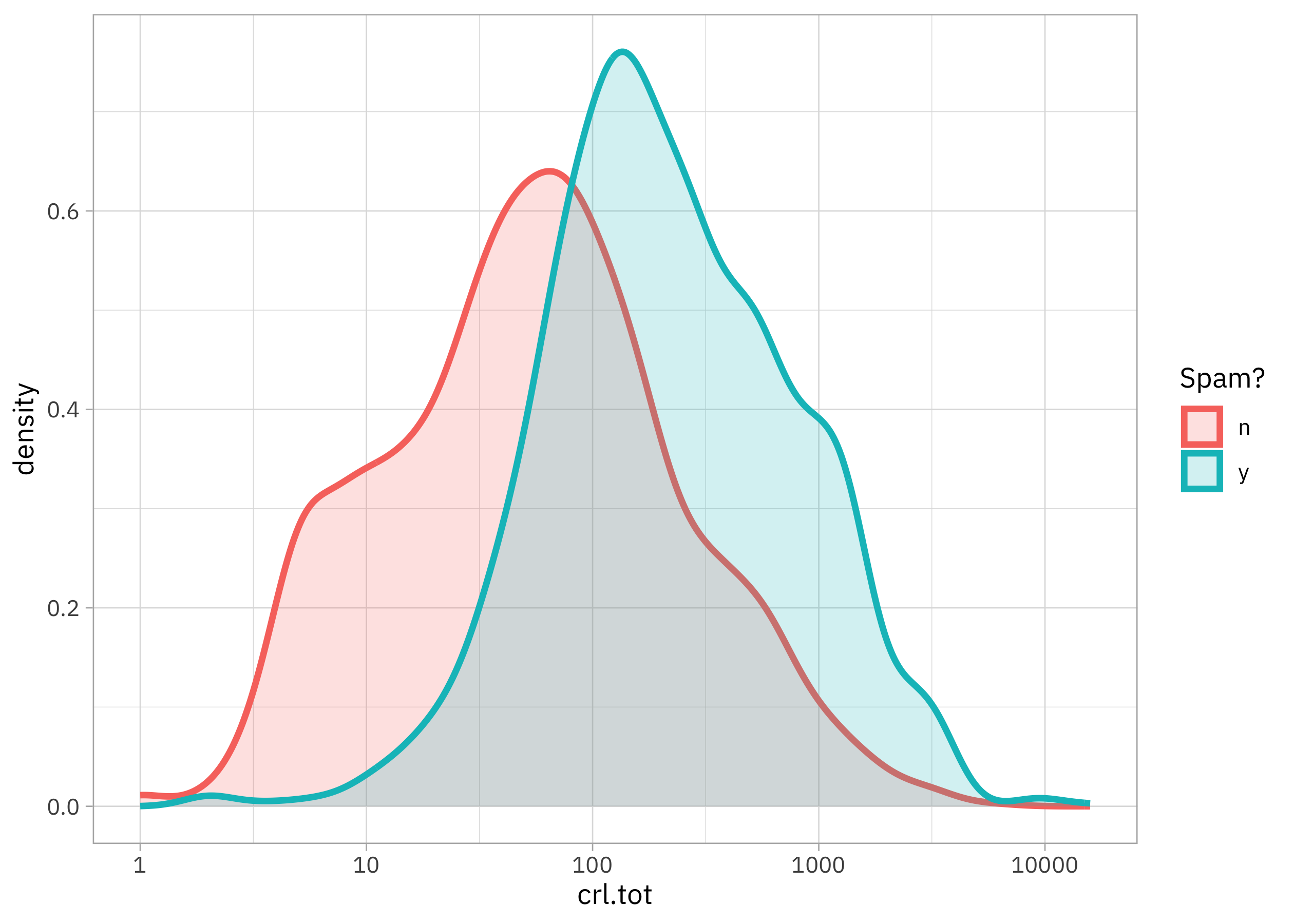

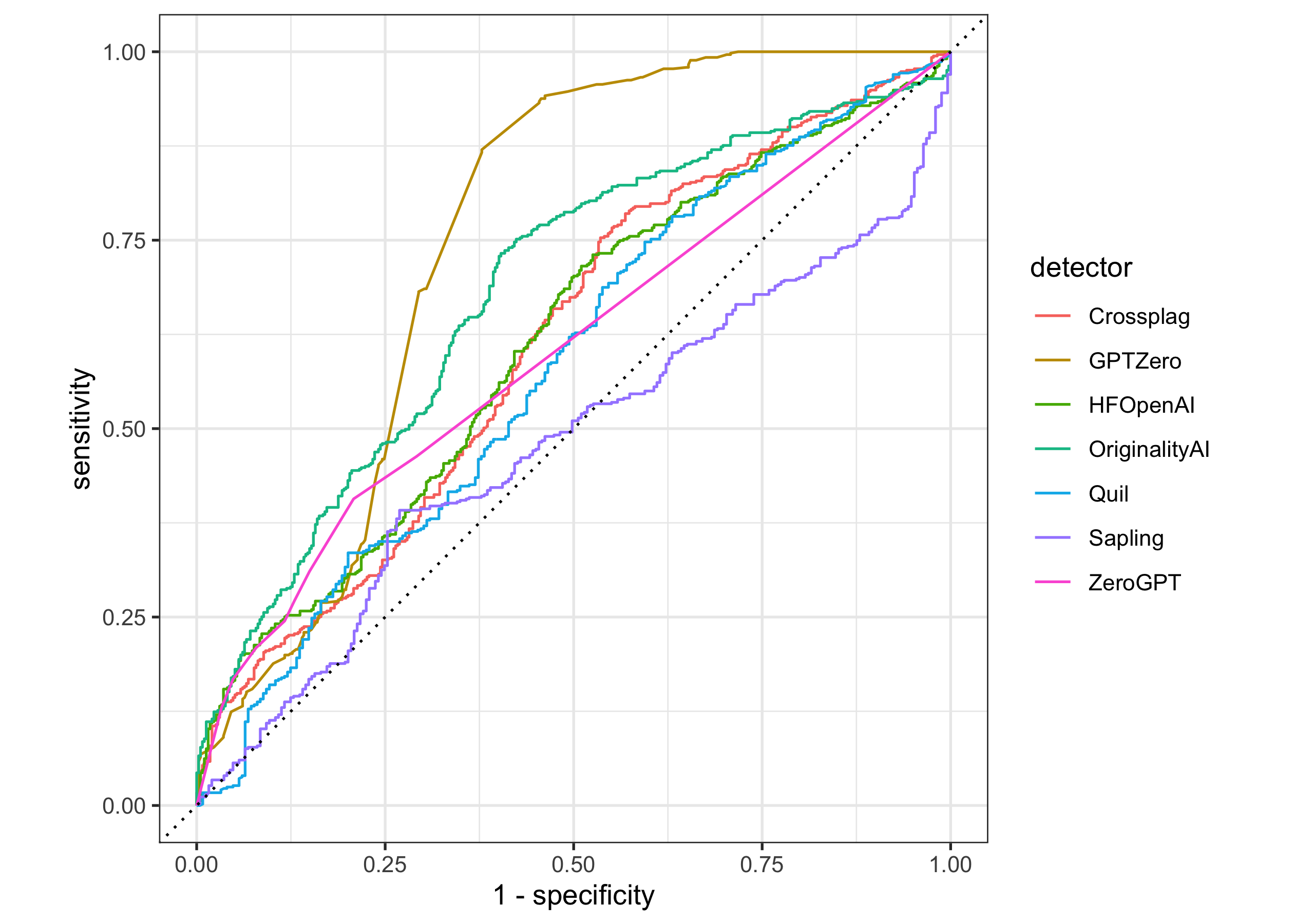

Learn about different kinds of metrics for evaluating classification models, and how to compute, compare, and visualize them.

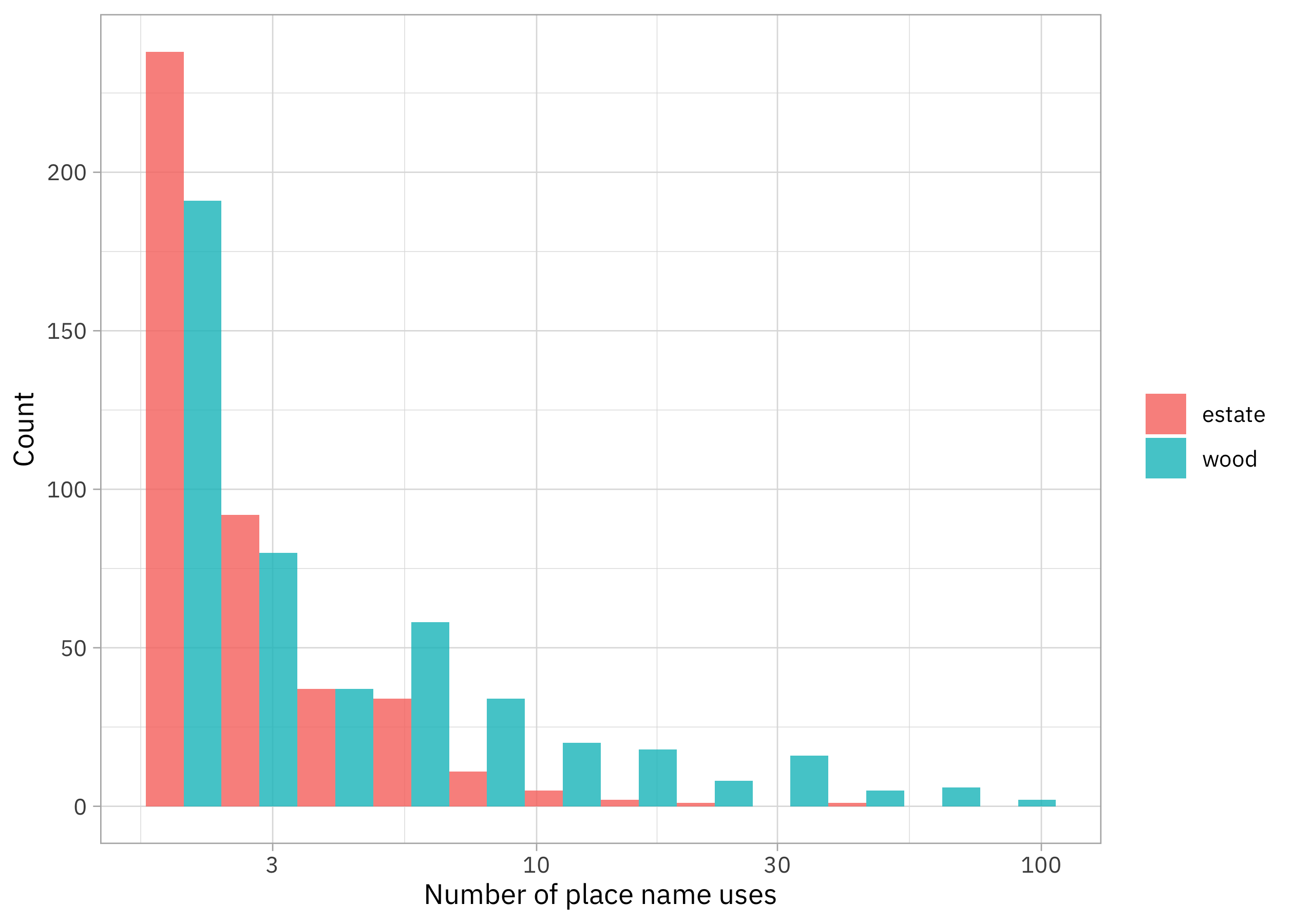

Let’s use byte pair encoding tokenization along with Poisson regression to understand which tokens appear more often (or less often) in US place names.

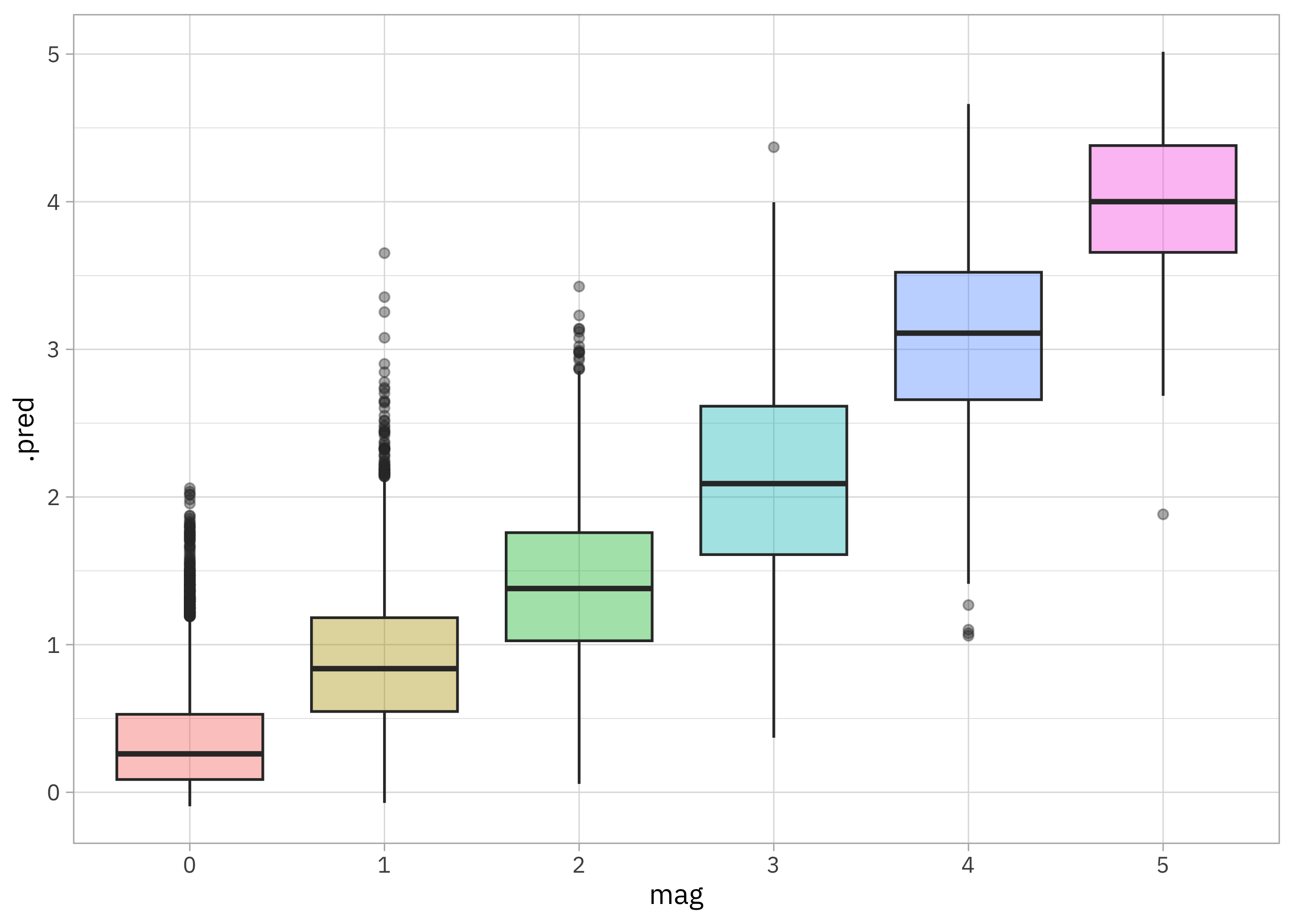

How well can we predict the magnitude of tornadoes in the US? Let’s use xgboost along with effect encoding to fit our model.

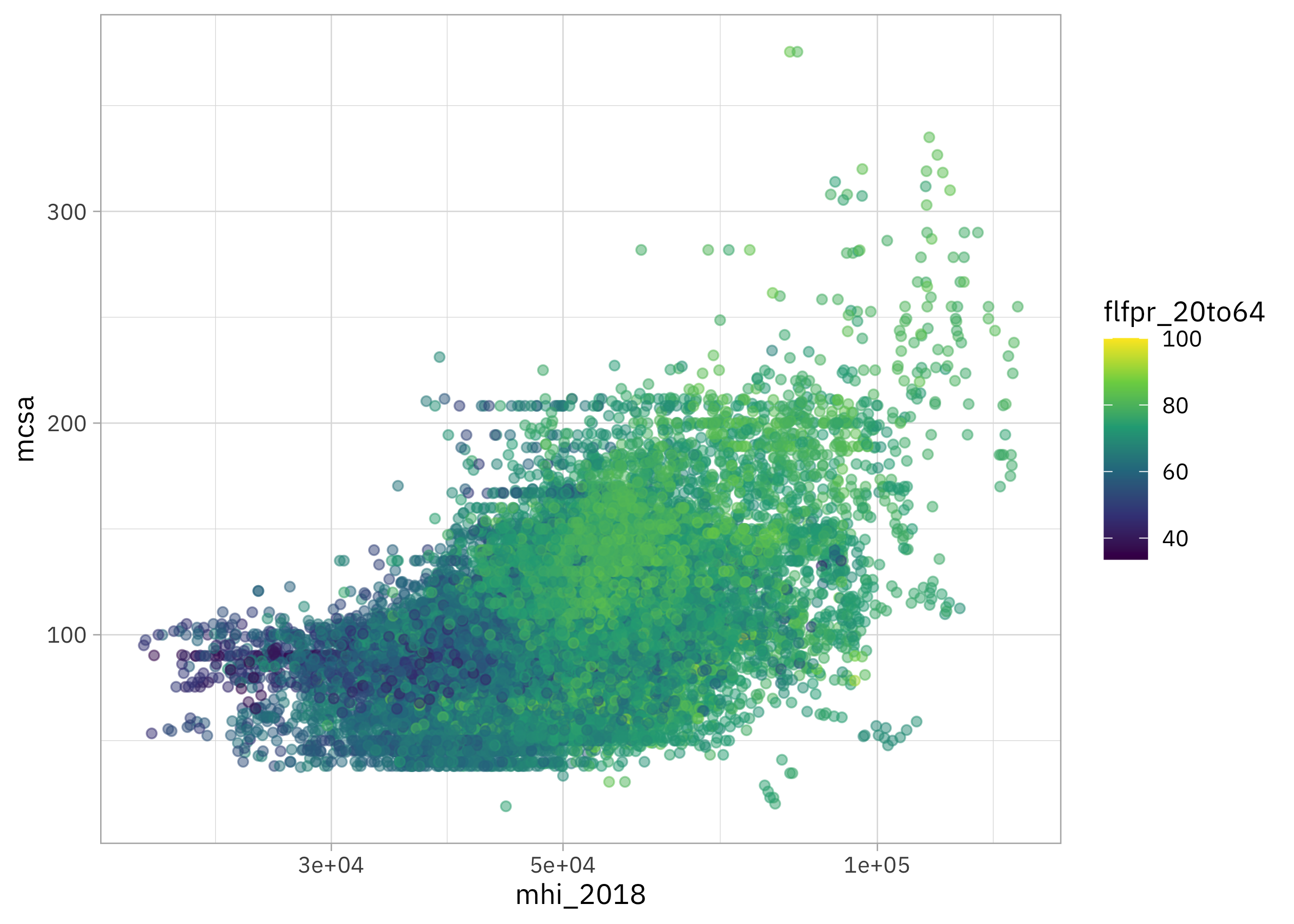

Can we predict childcare costs in the US using an xgboost model? In this blog post, learn how to use early stopping for hyperparameter tuning.